| Revision History | |
|---|---|
| Revision 0.5.4 | 2015/04/05 |


This document is under reconstruction. Beware!

This document’s goal is to describe the software architecture of the Hibari key-value database, to discuss parts of its implementation, and to document the Hibari client APIs.

At a minimum, application developers need to know how to use the various Hibari client APIs. Hibari is a key-value database, and key-value databases almost by definition have small APIs, so learning the basics isn’t too difficult. To be really effective, however, application developers also need a good overall understanding of how Hibari works.

For developers interested in working on Hibari itself, the source code is the ultimate documentation. But like most software developed in an industrial setting, Hibari grew at times quite quickly and at times sat on the shelf, waiting for customer demand. Anyone who works with the software, including Hibari’s original developers, needs documentation to understand not only how something works but also why certain choices were made … and what parts need more work.

Discussion of Hibari’s implementation

- The audience for this section is developers who wish to understand Hibari’s implementation at a deeper level and add new features, fix bugs, or just generally tinker with and experiment on the system.

To avoid a lot of cut-and-paste text, many of the operational details that a developer should know or must know about Hibari are found in the Hibari System Administrator’s Guide. This document assumes that a developer has already skimmed the System Administrator’s Guide and is willing to jump over to that guide when necessary.

Copyright © 2005-2014 Hibari developers. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

It would be wonderful to say that Hibari sprang from someone’s forehead, fully formed and adult, like the goddess Athena’s birth from the forehead of Zeus. Software development is usually a bit more organic and unplanned than that. Hibari is no exception.

Once upon a time, in a galaxy far, far away… Hibari started as a small, focused replacement for Mnesia, the database bundled with Erlang/OTP. Cloudian, Inc. (formerly Gemini Mobile Technologies) was bidding on a project that required an extremely high-throughput database with high availability and data durability guarantees, for a workload with a very low read/write ratio (i.e. very write-intensive). The amount of money dedicated to hardware was fixed. Mnesia could do the job very well, except for the throughput. Cloudian needed something both faster and simpler. The skeleton of Hibari was written in haste, in case Cloudian got the contract.

Fortunately, Cloudian lost the bid for the contract. Hibari sat on the shelf for a while, then was picked up and developed as a main-memory database, like Mnesia. Then requirements changed. Then a project was canceled, and Hibari was set aside. Then it was picked up and set aside again. Each time, requirements changed.

Hindsight is perfect. This section attempts to give the reader (developers who are maintaining Hibari or adding new features) some background on why the code is structured the way it is. The APIs for some modules are straightforward to use, and others are not so clear. Some modules were written together in a brief period of time, and others evolved slowly over several years.

- Server (aka the brick)

- Chain replication

- Consistent hashing

- Client

- Admin Server

- Miscellaneous

As described in the Hibari Sysadmin Guide, "Bricks outside of chain replication" section, a Hibari logical brick can be used with or without chain replication. When used without chain replication, each brick is a standalone data storage entity. Any replication of data across logical bricks must be done by the client, typically by a “quorum replication” technique.
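To illustrate the general idea of client-side quorum replication, the sketch below models each standalone brick as an in-memory dict. A write counts as successful only when a strict majority of bricks acknowledges it, and a read returns the newest-timestamped value from a majority of responders. This is a generic majority-quorum sketch, not the brick_squorum.erl API. `FakeBrick`, `quorum_write`, and `quorum_read` are hypothetical names, and timestamps are assumed to be supplied by the client.

```python
from typing import Dict, List, Optional, Tuple

class FakeBrick:
    """Hypothetical stand-in for a standalone logical brick (no chain replication)."""

    def __init__(self, up: bool = True) -> None:
        self.up = up
        self.data: Dict[bytes, Tuple[int, bytes]] = {}  # key -> (timestamp, value)

    def set(self, key: bytes, ts: int, value: bytes) -> bool:
        if not self.up:
            return False  # an unreachable brick does not acknowledge the write
        old = self.data.get(key)
        if old is None or old[0] < ts:
            self.data[key] = (ts, value)  # keep only the newest timestamp
        return True

    def get(self, key: bytes) -> Optional[Tuple[int, bytes]]:
        return self.data.get(key) if self.up else None

def quorum_write(bricks: List[FakeBrick], key: bytes, ts: int, value: bytes) -> bool:
    acks = sum(1 for b in bricks if b.set(key, ts, value))
    return acks > len(bricks) // 2  # success only with a strict majority

def quorum_read(bricks: List[FakeBrick], key: bytes) -> Optional[bytes]:
    replies = [b.get(key) for b in bricks if b.up]
    if len(replies) <= len(bricks) // 2:
        return None  # too few bricks answered to form a quorum
    found = [r for r in replies if r is not None]
    return max(found)[1] if found else None  # newest timestamp wins
```

A failed quorum write can still leave the value on a minority of bricks. Cleaning up such leftovers is one of the harder parts of quorum replication, and this sketch does not attempt it.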

The source modules that implement the logical brick are divided into two groups:

- Write-ahead logging, aka disk persistence

The following modules maintain the write-ahead logs on disk. See the Hibari Sysadmin Guide, "Write-Ahead Logs" section for a description of the two types of write-ahead log and how they interact with each other.

- gmt_hlog.erl
- gmt_hlog_common.erl
- gmt_hlog_local.erl

- Protocol service and in-memory data management

The following modules handle Hibari client requests and manage the in-core binary trees used for key management:

- brick_ets.erl
- brick_server.erl

The chain replication algorithm is implemented in brick_server.erl. That module also contains server and client code, which helps explain why it’s the largest source module in the Hibari application.

The consistent hashing algorithm is implemented in the brick_simple.erl module. The code in this module has two roles:

- The "gen_server" callbacks for the brick_simple registered process that runs on each brick node. This server receives updates from the Admin Server when there are changes to chain membership.
- Client-side stub functions, executed by a Hibari client application, to implement the client API that uses consistent hashing.
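The sketch below illustrates the general idea of weighted consistent hashing. Each chain owns a slice of the unit range [0.0, 1.0) in proportion to its weight, and a key is hashed to a point in that range. It assumes MD5 as the hash function and a sorted list of slice boundaries. The function names are hypothetical, and the hash function and map layout used by brick_simple.erl may differ.

```python
import bisect
import hashlib
from typing import List, Tuple

HashMap = Tuple[List[float], List[str]]  # (upper slice boundaries, chain names)

def build_map(chains: List[Tuple[str, float]]) -> HashMap:
    """Assign each chain a slice of [0.0, 1.0) proportional to its weight."""
    total = sum(w for _, w in chains)
    bounds: List[float] = []
    names: List[str] = []
    acc = 0.0
    for name, weight in chains:
        acc += weight / total
        bounds.append(acc)  # upper (exclusive) boundary of this chain's slice
        names.append(name)
    bounds[-1] = 1.0  # guard against floating-point rounding
    return bounds, names

def key_to_point(key: bytes) -> float:
    """Hash a key to a point in [0.0, 1.0) using the top 53 bits of MD5."""
    digest = hashlib.md5(key).digest()
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53

def chain_for_key(hash_map: HashMap, key: bytes) -> str:
    bounds, names = hash_map
    return names[bisect.bisect_right(bounds, key_to_point(key))]
```

Only 53 bits of the digest are used so that the largest possible point is still strictly below 1.0 as a Python float.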

Hibari clients fall into two categories: those that use consistent hashing and those that do not.

- As described in the section called “Consistent hashing modules”, the brick_simple.erl module implements the client API that uses consistent hashing.
- The brick_server.erl module implements the low-level client API that is not aware of consistent hashing.
- The brick_squorum.erl module is a partial implementation of a “quorum replication” method for managing data consistency across multiple logical bricks. This module is used only by the Admin Server and is tailored to the Admin Server’s use. It should not be used by other quorum replication-based applications.

See Hibari Sysadmin Guide, "The Admin Server Application" section for a description of the various services provided by the Admin Server application.

- brick_admin.erl provides the major external API to most of the Admin Server’s functions as well as basic table management functions.
- brick_bp.erl implements the “brick pinger” processes. Each pinger process is responsible for monitoring the health of a single Hibari logical brick.
- brick_chainmon.erl implements the “chain monitor” processes. Each chain monitor is responsible for monitoring the status of a single Hibari chain and for reconfiguring the chain safely as its member bricks crash and restart.
- brick_migmon.erl implements the server process that is responsible for monitoring data migrations that take place whenever chains are added, deleted, or reweighted.
- brick_sb.erl implements the “scoreboard” process, which provides historical data about each brick and chain state transition.

The section called “Admin Server modules” indirectly outlines many of the processes that, when grouped together, form the Hibari Admin Server application:

- The main Admin Server process.

- Many “brick pinger” processes

- Many “chain monitor” processes

- The “migration monitor” process

- The “scoreboard” process

- … and several other long- and short-lived processes, discussed in the module-by-module commentary.

Acting together, these processes maintain data consistency for all bricks in a Hibari cluster. However, individual processes can crash and restart at unpredictable times. The Admin Server must be able to recover correctly from a failure of any number of its helper processes, regardless of timing.

The Admin Server implementation was written for correctness first and for speed/efficiency only when necessary. It has been used in production environments with a rough total of 2,000 logical brick pinger and chain monitor processes. At this scale, the implementation shows signs of stress under the worst-case scenario of "Restart the Admin Server and all logical bricks on all physical bricks simultaneously", but it works nonetheless.

The architectural decision to have one Admin Server process, one scoreboard process, one pinger process per brick, and one health monitor per chain is quite intentional. If only one process performs a given task at any given time, then there cannot be race conditions in performing that task.

The modules in this section appear in alphabetical order. For an overview of their use by functional category, see Section 2.1, “Major subsystems”.

This module provides the Erlang/OTP "application" behavior for the Hibari application. Such modules are usually quite small. The reason why brick.erl doesn’t fit the small pattern is that it has some extra logic to shut down logical bricks in a particular order when application shutdown has been requested.

Please see Hibari Sysadmin Guide, "The Admin Server Application" section for a description of the Admin Server’s various functions.

The Admin Server has a "schema", though perhaps that’s a poor choice of name. The schema defines:

- Each Hibari table
- The consistent hashing {TableName, Key} → chain mapping
- Status of any data migrations

The schema, together with the “scoreboard” operational history, is stored in the “bootstrap bricks”. See Hibari Sysadmin Guide, "Admin Server’s Private State: the Bootstrap Bricks" section and Hibari Sysadmin Guide, "Bricks outside of chain replication" section for more details.

Functions for creating a new schema and defining the names of the bootstrap bricks that will store the schema are in this module. So are assorted functions for querying the schema, such as brick_admin:get_tables/0 and brick_admin:get_table_chain_list/2.

Changes to chain length and chain addition/deletion/reweighting are also available here.

When a chain health monitor process makes a major state transition, it will notify the Admin Server of the change. The Admin Server will then broadcast (or "spam") status notifications to all server and client nodes. The data structure spammed is a #g_hash_r record, or simply a "global hash record". The Admin Server maintains this record per table: each table has its own unique global hash record.

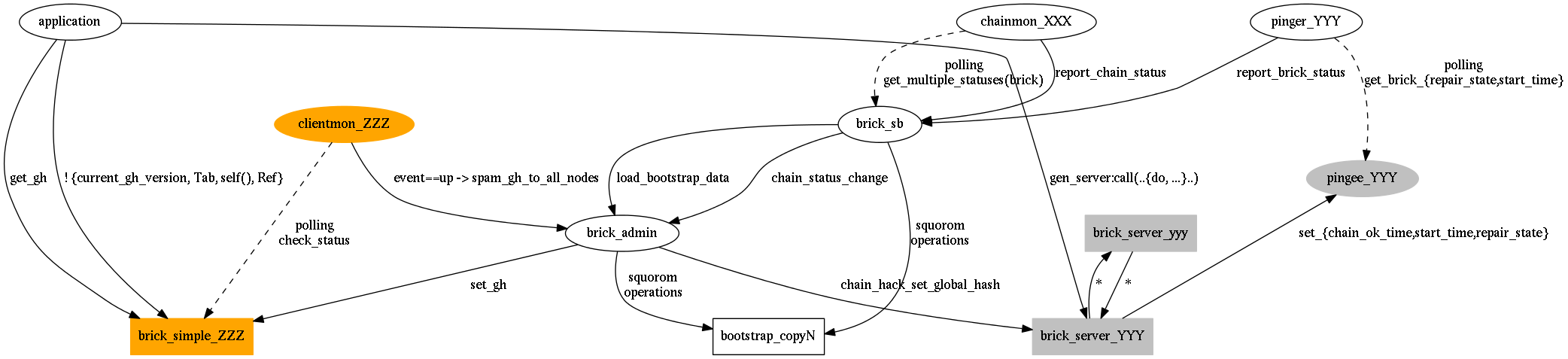

Among other things, the global hash contains the bricks in each chain and their roles. With it, a gdss client can know which chain a key belongs to and which brick in that chain is acting as head or tail, so it can talk directly to the correct brick for a given operation.

The Admin Server increments a minor revision number in the global hash for each update. Bricks and clients can then compare this revision number with the one they already have to ensure that they don’t revert to using an older global hash.
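Here is a minimal sketch of that revision check. It assumes the global hash can be modeled as a small record carrying the table name, a `minor_rev` integer, and a chain-to-bricks map. `GlobalHash`, `HashCache`, and their fields are hypothetical stand-ins for the #g_hash_r record, not its real definition.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass(frozen=True)
class GlobalHash:
    table: str
    minor_rev: int                 # incremented by the Admin Server on every update
    chains: Dict[str, List[str]]   # chain name -> bricks in order, head first

class HashCache:
    """Per-node cache that refuses to go back to an older global hash."""

    def __init__(self) -> None:
        self._by_table: Dict[str, GlobalHash] = {}

    def offer(self, new: GlobalHash) -> bool:
        current = self._by_table.get(new.table)
        if current is not None and new.minor_rev <= current.minor_rev:
            return False  # stale or duplicate "spam" message: ignore it
        self._by_table[new.table] = new
        return True

    def get(self, table: str) -> Optional[GlobalHash]:
        return self._by_table.get(table)
```

Because spammed updates can arrive out of order, the receiver keeps whichever record has the highest revision rather than whichever arrived last.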

The brick_admin:fast_sync() family of functions is an attempt to help Hibari cluster administrators perform bulk copies of data from in-service bricks to bricks that have zero data. For example, consider a physical brick that has crashed due to data loss caused by a hard disk failure. The crashed brick can be put back into service after fixing the disk problem, but the brick’s data has been lost. Chain replication can create new replicas of the lost data, but the chain replication implementation can create a lot of disk I/O on the upstream brick for long periods of time, e.g. several days for terabytes of data. The fast_sync() function attempts to minimize the amount of random disk I/O by copying keys & values in file+offset sorted order.
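The core idea of fast_sync() is to read value blobs in on-disk order instead of key order. It can be sketched as a sort on the (FileNumber, Offset) location taken from each key's store tuple. This simplified illustration assumes every value lives on disk. The `copy_order` function and its input tuple layout are hypothetical and are not the brick_admin:fast_sync() implementation.

```python
from typing import Iterable, List, Tuple

# (key, file_number, offset) for each key whose value blob lives on disk
Location = Tuple[bytes, int, int]

def copy_order(locations: Iterable[Location]) -> List[bytes]:
    """Return keys ordered by where their values sit on disk.

    Reading in (file, offset) order turns many random seeks into
    mostly sequential reads of each log file.
    """
    ordered = sorted(locations, key=lambda loc: (loc[1], loc[2]))
    return [key for key, _, _ in ordered]
```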

- A "gen_server" for the Admin Server main process.

- The “bootstrap scan” process, which scans all keys in the bootstrap bricks every 5 seconds and repairs any inconsistencies created by crashing & restarting bootstrap bricks and/or a crash of the Admin Server itself.

- Each request to "spam" a new global hash record to all servers and clients will spawn a process to send the new global hash to all server nodes and then to all brick_simple servers on all nodes.
- The "fast sync" bulk data copy API will spawn a process to coordinate the bulk data copy activities.

The Admin Server registers brick_admin_event_h.erl as an event handler with the partition detector application. Its handle_event() function takes action for two kinds of events:

- If a network heartbeat alarm is set, an app log message is generated. If the alarm is on the A network, that node is forcibly disconnected from the VM’s net_kernel services.
- If another Admin Server instance is detected, the Hibari application will be stopped and the VM halted.

This is the supervisor for Admin Server-related processes. If the Admin Server application is not running, this supervisor will have no children to monitor.

A careful reader will notice that the preceding paragraph contains a contradiction: the Admin Server’s processes are supervised by another application’s supervisor.

The simple answer is: the Admin Server is not a 100% OTP-compliant application.

The complicated answer is: it is complicated. There’s so much day-to-day developer activity that relies on the Admin Server that it’s really inconvenient to package the Admin Server as a 100% OTP-compliant application. It’s much, much more convenient to have its source code and its processes mixed in with the rest of the Hibari server code and processes.

So, the Admin Server application is OTP-compliant enough to be managed by the OTP application controller. But it is conveniently managed, source-wise and process-wise, within the Hibari/gdss application as a whole.

This module implements the “brick pinger” server: a "gen_fsm" process that is responsible for polling the health of a single logical brick. The Admin Server starts a "brick pinger" process for each logical brick in each chain in every table. See the Hibari Sysadmin Guide, "Brick Lifecycle Finite State Machine" section for a description of the state machine implemented by this module.

A 1-second periodic timer is used to check the status of the brick that this FSM monitors. Any major changes in status will be sent as a proplist to the "scoreboard" proc (see the section called “brick_sb.erl”).

Health polling is based on two attributes: brick repair state and brick repair time. Due to the polling nature of this interface (a weakness, see the "NOTE" section above), it’s possible that a logical brick in certain repair states X could crash, restart, and reenter the state X before the "pinger" polled again. The logical brick’s start time is checked to make certain that the "pinger" is talking to the same PID over time.
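The start-time check can be sketched as follows. The pinger remembers the brick's start time from the previous poll. If that time changes, the brick restarted in between, even if the reported repair state looks the same. `PollResult` and `detect_restart` are hypothetical names; brick_bp.erl's real poll data is richer.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class PollResult:
    repair_state: str  # e.g. "pre_init", "ok"
    start_time: float  # when the polled brick process started

def detect_restart(previous: Optional[PollResult], current: PollResult) -> bool:
    """True if the brick restarted between two polls, even if its state looks unchanged."""
    if previous is None:
        return False  # first poll: nothing to compare against
    return current.start_time != previous.start_time
```

Comparing only `repair_state`, two consecutive "ok" polls would look like one healthy brick. The start time is what exposes a crash and restart in between.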

- A long-lived process starts running the brick_monitor_simple() function, which creates a monitor to the remote logical brick and then waits for a {'DOWN', ...} message from that monitor.
- When an illegal state transition has been detected, a short-lived function is spawned to kill the remote node before it does something even more illegal than it already has.

This is the direct supervisor for all logical bricks that run on this node. The brick_shepherd.erl module provides the interface used to request that this supervisor start & stop a logical brick.

This module implements the “chain monitor” server: a "gen_fsm" process that is responsible for monitoring the health of all logical bricks within a single chain. The Admin Server starts a "chain monitor" for each chain in every table. See the Hibari Sysadmin Guide, "Chain Lifecycle Finite State Machine" section for a description of the state machine implemented by this module.

See the section called “brick_bp.erl” for an overview of the polling method used by the brick health "pinger" processes, the "chain monitor" processes, and the "scoreboard", and for that method’s known limitations.

In addition to monitoring health, the chain monitor proc is responsible for taking actions required to repair the chain.

Chain member status is tricky to calculate correctly. Any chain monitor process may crash at any time (e.g. due to bugs), or the entire machine hosting the monitor may crash. When the monitor has restarted, it doesn’t know how many times chain members may have changed state.

Each monitor queries the "scoreboard" to get the current status of each chain member. (Remember: The scoreboard’s info may be slightly out-of-date!) Each current status is compared with the monitor’s in-memory history of the status during the last check. (All bricks start in the unknown state.) If there’s a difference, then suitable action is taken.

Any change in brick or chain status is also reported to the scoreboard.

Each brick’s scoreboard status is converted to an internal status:

- unknown: The brick’s status is not known.
- disk_error: The brick has hit a disk checksum error and has not been able to initialize itself 100%.
- pre_init: The brick is running and ping’able, but the brick is not in service, and the state of the brick’s local storage is unknown.
- repairing: The monitor has chosen this brick to be the next brick to resume service in the chain. Its local storage is actively being repaired by the chain’s current tail.
- repair_overload: If a brick was in ‘repairing’ state and was determined to be overloaded (usually by too much disk I/O), the brick can be switched to this state to halt repair.
- ok: The brick is fully in-sync with the rest of the chain and is in service in its correct chain role.
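The compare-and-act loop described earlier can be sketched as a diff between the monitor's remembered statuses and the latest scoreboard statuses, with every brick starting as `unknown`. The status names come from the list of internal statuses. The function name and its (brick, old, new) result shape are assumptions made for illustration. They are not the logic of process_brickstatus_diffs().

```python
from typing import Dict, List, Tuple

STATUSES = {"unknown", "disk_error", "pre_init", "repairing", "repair_overload", "ok"}

def status_diffs(history: Dict[str, str],
                 scoreboard: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (brick, old_status, new_status) for every brick whose status changed."""
    diffs = []
    for brick, new in sorted(scoreboard.items()):
        if new not in STATUSES:
            raise ValueError(f"unexpected status {new!r} for {brick}")
        old = history.get(brick, "unknown")  # all bricks start as unknown
        if old != new:
            diffs.append((brick, old, new))
    return diffs
```

Each returned tuple is where a real monitor would decide on an action. It would also update its in-memory history for the next check.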

There are times when the chain monitor detects a chain that has zero running bricks. It must then examine the operational history of all bricks in the chain and determine which is the best brick to start first. This must be the last brick to crash. The chain monitor will wait forever for that "best brick" to start. If it is impossible to start (for example, the machine was destroyed by fire, or data was lost due to a disk failure), then a human may use the force_best_first_brick() function to give permission to the chain monitor to start another brick as the chain’s first brick.
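Choosing the "best brick" amounts to picking the chain member with the most recent crash time, unless a human has overridden that choice with force_best_first_brick(). This sketch assumes the operational history can be reduced to a map from brick name to the timestamp of its last crash. The `best_first_brick` function and its parameters are hypothetical.

```python
from typing import Dict, Optional, Set

def best_first_brick(last_crash: Dict[str, float], running: Set[str],
                     forced: Optional[str] = None) -> Optional[str]:
    """Return the brick the chain should start with, or None to keep waiting."""
    if forced is not None:
        # A human override replaces the "last brick to crash" rule.
        return forced if forced in running else None
    if not last_crash:
        return None
    best = max(last_crash, key=last_crash.get)  # the last brick to crash
    # Keep waiting (possibly forever) until exactly that brick is running again.
    return best if best in running else None
```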

There are times when a chain monitor restarts and discovers that some or all bricks in the chain are running. However, the new chain monitor cannot know exactly what roles each brick was in without polling each one … and because a chain transition may have been interrupted by a chain monitor crash, it is quite tricky to make correct decisions about what running bricks are OK and which ones are not.

The uncertainty of each brick’s exact status could be addressed by more logging of intermediate states to the Admin Server’s private state (i.e. stored in the bootstrap_copy* bricks). But each write to that private state has an overhead that, when multiplied by thousands of bricks and hundreds of chains, is quite significant.

When a chain monitor starts, it attempts to calculate the chain’s status. If the chain was healthy before the monitor crash, the chain will be deemed healthy. If the chain was degraded, then it’s likely that the chain will be "whittled down" to a single brick and then reconstructed using the chain repair protocol.

The process_brickstatus_diffs() function is a long, long bear of a function. Refactoring it would be useful, even necessary if the polling-based mechanism were changed (as suggested elsewhere). But it encodes a lot of hard-won knowledge of how to maintain data consistency under really weird, hard-to-find and hard-to-fix bugs over more than two years of testing and production use.

Read the code and the comments before embarking on any refactoring journey.

This module implements the callbacks for the OTP application cluster_info, bundled with Hibari. Functions such as cluster_info:dump_all_connected/1 can be used to write a huge amount of diagnostic information about the cluster.

At startup time, both the gdss and gdss_client applications register a callback with the cluster_info application.

This is the start/stop module for the gdss_client application. If the gdss application is not running on a node where a Hibari client application runs, then the lighter-weight gdss_client application must be running there instead.

This process implements a "gen_server" that monitors the condition of each client node that is monitored by the Admin Server. See the brick_admin:run_client_monitor_procs() function for the definition of the funs used when the client monitor senses that a client node has stopped or started.

This is the gdss application supervisor that is responsible for supervising all processes related to logical bricks:

- The "common log" write-ahead log
- The actual logical brick supervisor
- The brick "shepherd" to start & stop logical bricks
- The "simple" table state server (to support the Hibari client brick_simple.erl API)
- The brick mailbox monitor
- The checkpoint I/O throttle server
- The brick "primer" throttle server

In an ideal world, brick_ets.erl would be a flexible plug-in module to implement the data store for a Hibari logical brick. With regard to management of in-memory data structures (i.e. ETS ordered_set tables, which are implemented as balanced binary trees), this module is mostly self-contained. For disk-based persistence, it uses the write-ahead log modules (gmt_hlog.erl, gmt_hlog_common.erl, and gmt_hlog_local.erl) to handle disk I/O-related activity.

In the real world, brick_ets.erl is a mongrel, a mix of various tasks: some memory related, some disk related, and some other stuff.

A "pluggable storage system" was not part of Hibari’s original design. The original design called for two kinds of bricks, with two separate implementations: one RAM-based and one disk-based. The RAM-based one was written first: it was the original brick_ets.erl module, to be used as part of a quorum-style replication system. The disk-based module was delayed.

Then Hibari development stopped. Then it restarted, but this time needing to meet larger-than-RAM storage requirements and to use chain replication (instead of quorum replication). The decision was made to split the brick_ets.erl module into several pieces:

- brick_ets.erl would maintain the RAM-based data structures
- gmt_hlog.erl would maintain the disk-based write-ahead log
- brick_server.erl would maintain chain replication & repair logic

A big legacy of the original, everything-in-brick_ets.erl implementation is the set of functions with names prefixed by "bcb_". "BCB" = "Brick CallBack". These functions are required by brick_server.erl for various purposes that also need access to the ETS tables managed by brick_ets.erl.

Originally, the principal process for a logical brick was a "gen_server" behavior process that was implemented by brick_ets.erl. When the brick_ets.erl module was split apart, the choice was made to do the following:

- The "gen_server" process would use brick_server.erl as its implementation module and use its own #state record, which is _completely_ independent of the brick_ets.erl #state record.
- Keep the "gen_server" behavior callbacks in brick_ets.erl.
- Use a layer of indirection to allow brick_server.erl code to manage the behavior callbacks and #state of brick_ets.erl.

This choice complicates

The #state record used by brick_ets.erl has members in several major categories:

- Major configuration items, e.g. do_logging and bigdata_dir
- Operation counts, e.g. n_add and syncsum_count
- Log management, e.g. logging_op_serial and log
- Checkpoint management, e.g. check_pid
- ETS tables, e.g. ctab and shadowtab
- Dirty key management, e.g. dirty_tab and wait_on_dirty_q

- #state.ctab, the contents table. Except for changes made during a checkpoint, all data about a key lives in this table as a "store tuple" (see below).
- #state.dirty_tab, the dirty table. If a key has been updated but not yet flushed to disk, the key appears here. Necessary for any update, inside or outside of a micro-transaction, where race conditions are possible. See the section called “The dirty keys table”.
- #state.etab, the expiry table. If a key has a non-zero expiry time associated with it (an integer in UNIX time_t form), then the expiry time appears in this table.
- #state.mdtab, the brick private metadata table. Used for private state management during data migration and other tasks.
- #state.shadowtab, the shadow table. During checkpoints, the #state.ctab table is frozen while the checkpoint process dumps its contents. All updates made while the checkpoint is running (insert or delete) are stored in this table. When the checkpoint is finished, the contents of this table are applied to the contents table, and then the shadow table is deleted.
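The checkpoint interaction between #state.ctab and #state.shadowtab can be modeled with two dictionaries. This simplified sketch makes three assumptions: a Python dict stands in for each ETS table, a delete made during a checkpoint is recorded as a hypothetical `DELETED` marker, and lookups during a checkpoint consult the shadow table before the frozen contents table. The `BrickTables` class is hypothetical.

```python
from typing import Dict, Optional

DELETED = object()  # hypothetical tombstone for a delete made during a checkpoint

class BrickTables:
    def __init__(self) -> None:
        self.ctab: Dict[bytes, bytes] = {}
        self.shadowtab: Optional[Dict[bytes, object]] = None  # exists only during a checkpoint

    def start_checkpoint(self) -> Dict[bytes, bytes]:
        self.shadowtab = {}
        # ctab is not written again until finish_checkpoint(), so it is effectively frozen.
        return self.ctab

    def put(self, key: bytes, value: bytes) -> None:
        if self.shadowtab is not None:
            self.shadowtab[key] = value
        else:
            self.ctab[key] = value

    def delete(self, key: bytes) -> None:
        if self.shadowtab is not None:
            self.shadowtab[key] = DELETED
        else:
            self.ctab.pop(key, None)

    def get(self, key: bytes) -> Optional[object]:
        if self.shadowtab is not None and key in self.shadowtab:
            value = self.shadowtab[key]
            return None if value is DELETED else value
        return self.ctab.get(key)

    def finish_checkpoint(self) -> None:
        assert self.shadowtab is not None, "no checkpoint in progress"
        for key, value in self.shadowtab.items():  # apply buffered updates...
            if value is DELETED:
                self.ctab.pop(key, None)
            else:
                self.ctab[key] = value
        self.shadowtab = None  # ...then drop the shadow table
```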

A "store tuple" is the internal representation of a key’s metadata. It uses a variable-sized tuple to try to save some memory, avoiding storing common values. This is the tuple that is stored in the #state.ctab table.

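| Element 1 | Element 2 | Element 3 | Element 4 | Element 5 | Element 6 |
|---|---|---|---|---|---|
| Key | TStamp | Value | ValueLen | | |
| Key | TStamp | Value | ValueLen | ExpTime | |
| Key | TStamp | Value | ValueLen | Flags | |
| Key | TStamp | Value | ValueLen | ExpTime | Flags |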

Types used:

- Key = binary()
- TStamp = integer()
- Value = binary() | {integer(), integer()}
  - For value blob storage in RAM, Value = binary()
  - For value blob storage on disk, Value = {FileNumber::integer(), Offset::integer()}, where FileNumber and Offset give the starting location for the write-ahead log "hunk" that stores the actual value blob.
- ValueLen = integer()
- ExpTime = integer()
- Flags = list()
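The variable-size layout (Key, TStamp, Value, and ValueLen always present, with ExpTime and Flags as optional trailing elements) can be sketched as a pair of helpers. This is a Python model that assumes 0 and [] are the "common values" left out of the tuple. A 5-element tuple is then disambiguated by whether element 5 is a list. The helper names are hypothetical.

```python
from typing import List, Optional, Tuple, Union

Value = Union[bytes, Tuple[int, int]]  # in-RAM blob or (FileNumber, Offset)

def make_store_tuple(key: bytes, tstamp: int, value: Value, value_len: int,
                     exp_time: int = 0, flags: Optional[list] = None) -> tuple:
    flags = flags or []
    base = (key, tstamp, value, value_len)
    if exp_time == 0 and not flags:
        return base                      # 4 elements
    if not flags:
        return base + (exp_time,)        # 5 elements, element 5 is an integer
    if exp_time == 0:
        return base + (flags,)           # 5 elements, element 5 is a list
    return base + (exp_time, flags)      # 6 elements

def read_store_tuple(st: tuple) -> Tuple[bytes, int, Value, int, int, List]:
    key, tstamp, value, value_len, *rest = st
    exp_time, flags = 0, []
    for item in rest:
        if isinstance(item, list):
            flags = item      # the optional list element is always Flags
        else:
            exp_time = item   # the optional integer element is always ExpTime
    return key, tstamp, value, value_len, exp_time, flags
```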

The time required for full initialization of a logical brick is not predictable. We cannot know in advance how much metadata must be read from the brick’s private write-ahead log, nor do we know how much time it will take to read that data.

The OTP "supervisor" behavior places a limit on how long a worker process’s init() function can take, and while a supervisor is starting a worker process, it is blocked from starting/restarting/stopping other workers. Therefore, it’s very important that the logical brick initialization function execute in a short amount of time.

The second-to-last statement in brick_ets:init/1 is this:

```erlang
self() ! do_init_second_half,
```

Then the handle_info/2 callback can take as much time as is necessary to read & process the updates in the private write-ahead log.
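The effect of sending do_init_second_half to itself can be modeled with a toy mailbox. init() only enqueues the message and returns, so the supervisor is unblocked immediately, and the slow log replay happens later when the message is handled. The `ToyBrick` class and its methods are hypothetical. This illustrates the pattern; it is not brick_ets.erl's code.

```python
from collections import deque
from typing import Deque, List

class ToyBrick:
    def __init__(self, log: List[str]) -> None:
        self.mailbox: Deque[str] = deque()
        self.log = log
        self.keys: List[str] = []
        self.ready = False

    def init(self) -> str:
        # Fast part only: queue the slow work and return at once,
        # like `self() ! do_init_second_half` in brick_ets:init/1.
        self.mailbox.append("do_init_second_half")
        return "ok"

    def handle_info(self, msg: str) -> None:
        if msg == "do_init_second_half":
            for entry in self.log:  # replaying the private log may take a long time
                self.keys.append(entry)
            self.ready = True

    def run_mailbox(self) -> None:
        while self.mailbox:
            self.handle_info(self.mailbox.popleft())
```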

Here are some examples of why the dirty keys table, #state.dirty_tab, is used (not an exhaustive list):

- To prevent more than one add operation from succeeding. If key K does not exist, and if multiple clients race to add(Table, K, Value), then only one client should succeed.
- To prevent more than one update operation from succeeding with the same {testset,CurrentTimeStamp} flag.
- To prevent more than one micro-transaction from committing when using exclusive operations (e.g. replace) and/or exclusive flags (e.g. {testset,CurrentTimeStamp} and key_must_exist).

A Hibari micro-transaction is designed to avoid holding locks by forcing the client to send the entire micro-transaction in a single message. The server brick’s gen_server should immediately be able to enforce the above properties, correct? Yes and no, unfortunately.

Because each logical brick is implemented as a single "gen_server" process (we ignore the helper processes enumerated in the section called “Processes created by brick_ets.erl”), all messages processed by the brick are automatically serialized. That serialization property makes it much easier to implement immediate commit/abort decisions. However, there’s a small problem: disk I/O is slow. Here is one example of a race condition that is caused by slow disk I/O:

1. Assume key K does not exist.
2. Client X sends add(Table, K, Value1) to brick B.
3. Brick B receives the add op. The key K does not exist, so the operation is permitted.
4. Brick B writes an insert record into its private write-ahead log and requests a file sync.
5. Brick B is told that the file sync has not yet finished and therefore cannot send a reply to Client X yet.
6. Client Y sends add(Table, K, Value2) to brick B.
7. Brick B receives the add op. The key K does not exist, so the operation is permitted. Although key K was added in step #4, that operation’s write-ahead log entry has not yet been flushed safely to disk, so Brick B cannot yet guarantee that the key does exist.
8. Brick B writes an insert record into its private write-ahead log and requests a file sync.
9. Brick B is told that the file sync has not yet finished and therefore cannot send a reply to Client Y yet.
10. Brick B is informed asynchronously that the flush of step #3’s operation is finished. B sends a reply to Client X of ok.
11. Brick B is informed asynchronously that the flush of step #7’s operation is finished. B sends a reply to Client Y of ok. This reply violates the principle of strong consistency and is therefore incorrect.

Any key that is updated by an operation that is waiting for its write-ahead log entry to be flushed to disk will have an entry in the #state.dirty_tab ETS table. When the fsync(2) system call is finished, the key will be removed from #state.dirty_tab and the #state.ctab (or the "shadow table", if a checkpoint is in progress) will be updated to make the key’s update visible.
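The race in the Client X / Client Y walkthrough, and how a dirty table closes it, can be sketched with a toy brick whose fsync completes only when `sync_done()` is called. Dicts stand in for the ETS tables. In this model, an op that touches a dirty key is parked in a queue named after #state.wait_on_dirty_q and re-evaluated after the sync. That parking behavior is an assumption, and the class and method names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

class DirtyBrick:
    def __init__(self) -> None:
        self.ctab: Dict[bytes, bytes] = {}          # visible, durable keys
        self.dirty_tab: Dict[bytes, bytes] = {}     # updated, waiting for fsync
        self.pending: List[Tuple[str, bytes]] = []  # (client, key) awaiting a reply
        self.wait_on_dirty_q: List[Tuple[str, bytes, bytes]] = []  # ops parked on a dirty key

    def add(self, client: str, key: bytes, value: bytes) -> Optional[str]:
        """Return an immediate reply, or None if the reply is deferred."""
        if key in self.dirty_tab:
            self.wait_on_dirty_q.append((client, key, value))  # re-check after the sync
            return None
        if key in self.ctab:
            return "key_exists"
        self.dirty_tab[key] = value  # log write done, fsync requested
        self.pending.append((client, key))
        return None

    def sync_done(self) -> List[Tuple[str, str]]:
        """fsync finished: make dirty keys visible, reply, then retry parked ops."""
        replies = []
        for client, key in self.pending:
            self.ctab[key] = self.dirty_tab.pop(key)
            replies.append((client, "ok"))
        self.pending = []
        parked, self.wait_on_dirty_q = self.wait_on_dirty_q, []
        for client, key, value in parked:
            reply = self.add(client, key, value)
            if reply is not None:
                replies.append((client, reply))
        return replies
```

Unlike step 11 of the walkthrough, Client Y is told `key_exists` instead of a second, incorrect `ok`.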

If a Hibari client calls do when the first op in the DoList is the atom txn, then the DoList will be evaluated as a micro-transaction. The check is done by do_do2/3, with micro-transaction preconditions checked by do_txnlist/3.

If do_txnlist/3 detects that a micro-transaction precondition has been violated, e.g. an add of a key that already exists, then an error accumulator is built to inform the client of which items in DoList failed. Note that the txn op is removed from the DoList before do_txnlist/3 starts.

If do_txnlist/3 finds no errors in the micro-transaction, then the DoList is executed by the same function that processes non-micro-transaction lists, do_dolist/4.
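Here is a rough model of that flow. A DoList starting with `txn` has the marker stripped and every op's precondition checked first. Only if none fail is the list run through the same executor as an ordinary DoList. The op tuples, the error format, and the helper names are hypothetical, and only the add and replace preconditions are modeled. All ops in a transaction are checked against the store as it was before the transaction.

```python
from typing import Dict, List, Optional, Tuple, Union

Op = Tuple[str, bytes, bytes]  # (op_name, key, value), e.g. ("add", b"k", b"v")

def precondition_error(store: Dict[bytes, bytes], op: Op) -> Optional[str]:
    name, key, _ = op
    if name == "add" and key in store:
        return "key_exists"
    if name == "replace" and key not in store:
        return "key_not_exist"
    return None  # "set" has no precondition in this sketch

def run_ops(store: Dict[bytes, bytes], ops: List[Op]) -> List[str]:
    """Non-transactional executor: each op succeeds or fails on its own."""
    results = []
    for op in ops:
        err = precondition_error(store, op)
        if err is None:
            store[op[1]] = op[2]
        results.append(err or "ok")
    return results

def do(store: Dict[bytes, bytes], dolist: List[Union[str, Op]]):
    if dolist and dolist[0] == "txn":
        ops = dolist[1:]  # the txn marker is removed before checking
        errors = [(i, e) for i, op in enumerate(ops)
                  if (e := precondition_error(store, op)) is not None]
        if errors:
            return ("txn_fail", errors)  # error accumulator; nothing was applied
        return run_ops(store, ops)       # same executor as non-txn lists
    return run_ops(store, dolist)
```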

The management of the write-ahead log and maintaining strong consistency was a more difficult problem than I had first realized. To preserve strong consistency, the order of all updates must be preserved when writing log entries to the write-ahead log. Updates come from two sources:

- Client requests
- Chain replication messages, e.g. a log replay message from a brick’s immediate upstream neighbor. (NOTE: These messages are handled by the brick_server.erl module; see the section called “brick_server.erl”.)

The brick maintains a monotonically increasing counter, #state.logging_op_serial, to assign a serial number to each update. Each update is written in increasing serial number order. After an update is written, the brick will request an fsync(2) system call on the log. The write-ahead log manager will initiate the call (if no fsync(2) call is currently in progress) or queue the request for later (if an fsync(2) system call is already in progress).

Because the brick does not know when the fsync(2) system call will finish, the brick stores the operation and its serial number in a queue called #state.logging_op_q.

The write-ahead log manager will notify the brick when an fsync(2) system call is finished, telling the brick the largest serial number N. The brick will remove all pending requests from the #state.logging_op_q that have serial numbers less than or equal to N. Processing of those pending requests is then resumed.
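The serial-number bookkeeping can be sketched as follows. Because entries are appended in increasing serial order, releasing everything up to N is just popping from the front of the queue. The attribute names mirror the #state fields. The `LoggingQueue` class and its methods are hypothetical, and operations are plain strings here.

```python
from collections import deque
from typing import Deque, List, Tuple

class LoggingQueue:
    def __init__(self) -> None:
        self.logging_op_serial = 0
        self.logging_op_q: Deque[Tuple[int, str]] = deque()

    def log_update(self, op: str) -> int:
        self.logging_op_serial += 1  # monotonically increasing serial number
        self.logging_op_q.append((self.logging_op_serial, op))
        return self.logging_op_serial

    def sync_finished(self, n: int) -> List[str]:
        """Release every pending op with serial <= n, in serial order."""
        released = []
        while self.logging_op_q and self.logging_op_q[0][0] <= n:
            released.append(self.logging_op_q.popleft()[1])
        return released
```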

As described above, the "syncpid"'s job is pretty simple:

1. Collect requests for an fsync(2) call. (Each request is tagged with a log sequence number.)
2. Now and then, start an fsync(2) call via the gmt_hlog_local.erl API.
3. When the call is finished, notify the brick of the largest log sequence number serviced by the completed fsync(2) call.

The tricky part is step #2: specifically, when should "now and then" be? There are a couple of easy answers to the question:

- Initiate an fsync(2) call whenever a single request in step #1 arrives. Block all other fsync(2) requests until this one finishes.

  - Both throughput and latency under high load are quite poor.

- Collect requests in step #1 for a fixed amount of time, e.g. 100 milliseconds, then start fsync(2).

  - Throughput under high load is very good, but latency under light loads is very high.

The current implementation, in collect_sync_requests/3, uses a variable amount of time in step #1 by waiting a maximum of 5 milliseconds since the last fsync request before going to step #2. The method's virtues are that it is simple and that it is "good enough" under both very low and very high load conditions.
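A minimal sketch of the variable-length collection window, assuming the 5 ms limit is measured from the most recently received request (the text can also be read as measured from the last fsync). batch_fsyncs is a hypothetical helper, not collect_sync_requests/3. For simplicity it ignores fsync(2) duration, so requests that would arrive while a sync is in progress are not modeled.

```python
def batch_fsyncs(arrivals_ms, window_ms=5.0):
    """Group sorted request arrival times (ms) into fsync(2) batches.

    A batch closes once no new request has arrived for window_ms.
    Returns a list of (fsync_start_ms, [arrival times in the batch]).
    """
    batches = []
    current = []
    for t in arrivals_ms:
        if current and t - current[-1] > window_ms:
            # Quiet period exceeded: start fsync(2) for the batch so far.
            batches.append((current[-1] + window_ms, current))
            current = []
        current.append(t)
    if current:
        batches.append((current[-1] + window_ms, current))
    return batches
```

Under light load a lone request waits at most window_ms before its fsync(2) starts; under heavy load requests keep extending the batch, so many are covered by one system call. An unbounded stream of closely spaced requests would keep a batch open indefinitely in this sketch; a real implementation might cap batch duration, and the text does not say whether collect_sync_requests/3 does.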

As explained in the section called “The "store tuple"”, when a value blob is stored on disk, its store tuple representation is {FileNumber::integer(), Offset::integer()}. These two integers are used to find the value blob’s storage location on disk. See the section called “gmt_hlog.erl” for API details.

A brick’s behavior for value storage is defined by the value of #state.bigdata_dir:

- If undefined, then values are stored in RAM, i.e. as an Erlang binary within the store tuple.

- If not undefined, then values are stored on disk.

When a key is set by a set/add/replace operation in a table that stores value blobs on disk, there are actually two hunks written to the brick’s write-ahead log:

- The value blob itself is written in a hunk first.

- Then the brick’s metadata hunk is written second. This hunk contains the store tuple for this key and therefore contains the {FileNumber,Offset} tuple for the location of the value blob hunk stored in step #1.

To retrieve a hunk, the gmt_hlog API is used, passing the FileNumber and Offset as arguments. The library function then:

- Converts FileNumber to a full file path for the log sequence file.

- Opens the file and seeks to offset Offset.

- Reads the write-ahead log hunk header, which contains hunk metadata such as blob size and MD5 checksum.

- Reads the hunk blob, which immediately follows the hunk header.

  - If the client passes the blob size as an extra argument, the two reads are combined into a single read request.
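The read path above can be illustrated with a toy hunk format. This is a minimal sketch under a hypothetical header layout (4-byte big-endian blob length followed by a 16-byte MD5 digest); it is not gmt_hlog's real on-disk format, and write_hunk/read_hunk are illustrative names. It takes a file path directly instead of converting a FileNumber.

```python
import hashlib
import struct

# Hypothetical header layout, NOT gmt_hlog's real format:
# 4-byte big-endian blob length, then a 16-byte MD5 digest of the blob.
HEADER = struct.Struct(">I16s")


def write_hunk(f, blob):
    """Append a hunk to an open binary file; return its offset."""
    offset = f.tell()
    f.write(HEADER.pack(len(blob), hashlib.md5(blob).digest()))
    f.write(blob)
    return offset


def read_hunk(path, offset, blob_size=None):
    """Seek to offset, read the header and blob, and verify the MD5."""
    with open(path, "rb") as f:
        f.seek(offset)
        if blob_size is None:
            # Two reads: the header first, then the blob that follows it.
            size, digest = HEADER.unpack(f.read(HEADER.size))
            blob = f.read(size)
        else:
            # Size known in advance: one combined read of header + blob.
            data = f.read(HEADER.size + blob_size)
            size, digest = HEADER.unpack_from(data)
            blob = data[HEADER.size:HEADER.size + size]
    if hashlib.md5(blob).digest() != digest:
        raise ValueError("MD5 checksum mismatch at offset %d" % offset)
    return blob
```

The offset returned by write_hunk plays the role of the Offset half of the {FileNumber, Offset} store tuple.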

The serial nature of message handling by the "gen_server" behavior is almost always a good thing. However, dealing with disk I/O is one of the few times when it would be really nice to have "gen_server" handle multiple messages in parallel.

It’s certainly possible to have "gen_server" handle multiple messages in parallel, but it’s the developer’s responsibility to juggle the asynchronous replies to clients. This can get very tricky very quickly. However, a logical brick must do this kind of juggling to minimize latency across all Hibari client requests.

The Erlang virtual machine does not expose an API to the OS’s mmap(2) and mincore(2) system calls, so it is impossible for a logical brick to know which parts of a file are in the page cache and which are not. Without that knowledge, the brick cannot predict how long it will take to open or read a log sequence file to retrieve a value blob.

To make the unpredictable disk I/O pattern into something almost 100% predictable, Hibari bricks borrow a trick from the Squid HTTP Caching Proxy server and the Flash HTTP server. To avoid having computation threads blocked by disk I/O, both servers use a pool of OS processes or Pthreads whose sole job is to perform disk I/O. The model goes something like this:

- The main server process/thread wishes to read file X.

- The main server process/thread sends a request to the I/O worker pool to read X.

- A process (or thread) in the worker pool opens X, reads X’s data, and closes X.

- The worker process/thread notifies the main server thread that the read of X has finished, via a pipe file descriptor.

- The main server process/thread receives the completion message from step #4 via the other end of the pipe.

- Now the main server process/thread can open and read file X with almost 0% probability that it will be blocked by the OS: it’s almost 100% certain that all file system metadata and file data are now in the OS page cache and therefore the probability of blocking due to disk I/O is nearly zero.

The logical brick uses the same basic strategy, which I’ve called "squid/flash priming" or simply "priming", as in "priming a pump". A brick will spawn a short-lived Erlang process to read the log hunk, notify the main gen_server that the I/O is finished, and then the main gen_server process can open & read the file with virtual certainty that it will not be blocked by disk I/O.
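A minimal Python sketch of the priming pattern, with threads standing in for short-lived Erlang processes and a queue standing in for the completion message. prime_then_read and main_loop are hypothetical names. The primer's read only exists for its side effect of pulling file data into the OS page cache.

```python
import queue
import threading


def prime_then_read(path, done):
    """Spawn a short-lived 'primer' that reads the whole file and then
    notifies the main loop by putting the path on the done queue."""
    def primer():
        try:
            with open(path, "rb") as f:
                while f.read(1 << 16):
                    pass  # discard the data; only the page-cache side effect matters
        finally:
            done.put(path)  # notify even on error so the main loop never hangs
    threading.Thread(target=primer, daemon=True).start()


def main_loop(paths):
    done = queue.Queue()
    for p in paths:
        prime_then_read(p, done)
    blobs = {}
    for _ in paths:
        # Wait for a completion message, not for disk I/O; a real server
        # would process other requests while waiting.
        p = done.get()
        with open(p, "rb") as f:  # second read: almost certainly a cache hit
            blobs[p] = f.read()
    return blobs
```

The design choice mirrors the text: the slow, unpredictable I/O happens in a throwaway worker, and the main loop only touches the file after it is very likely to be cached.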

Primer processes are used when reading data from a Hibari client get or get_many request as well as when reading value blobs during brick repair operations. Each has a separate throttle configuration attribute.

There is a throttle mechanism to keep too many squid/flash primer processes from executing simultaneously: the

There is plenty of opportunity for refactoring here. The current implementation has been "good and fast enough", but there’s almost certainly room for optimization, especially if very large blobs (greater than 4MB, approximately) are routinely used. Some possible optimizations would include:

There are two magic values that an Erlang client can use in a set/add/replace operation in place of the usual binary or iolist value blob.

- ?VALUE_REMAINS_CONSTANT - The client can use this magic constant in a set/add/replace operation to keep the value blob the same while changing other attributes of the key, such as expiration time or flags.

- ?VALUE_SWITCHAROO - Use of this flag is limited to the scavenger only and should not be used by any other client. See the section called “The scavenger” for more.

These are some areas where logic that most likely should be

moved to |

- Migration sweep logic bleeding into get_many1()

- Chain replication into filter_mods_from_upstream()

- Repair-related stuff in repair_diff_round1()

The implementation of the contents table and the shadow table, #state.ctab and #state.shadowtab respectively, causes some problems. The biggest problem is that when a checkpoint is in progress and the #state.shadowtab exists, then the "does the key exist?" decision must consult both tables in a sane manner.

The get_many operation is most affected by the necessity to look in both tables. The get_many_shadow() function implements the tricky logic that’s required to combine the contents of both the contents and shadow tables into a consistent set of results.

Because |

By default, MD5 checksums are generated for all data written to all write-ahead logs, and those MD5 checksums are checked for all data read from write-ahead logs.

If the file "disable-md5" exists in the Hibari server data directory, then data will be written to write-ahead logs without MD5 checksums, and data read from write-ahead logs will not have MD5 checksums verified.

If the file "use-md5-bif" exists in the Hibari server data directory,

then the erlang:md5/1 function will be used to create MD5

checksums. By default, the crypto:md5/1 is used to create MD5

checksums.

If an MD5 checksum error is detected, the easy thing to do is crash the brick. In practice, this approach causes some additional problems that we would rather avoid. The logic is in bigdata_dir_get_val():

Mark the sequence file as bad. The assumption is that the entire log sequence file is bad. This assumption may or may not be true, but the default is to be conservative. We don’t know if there are other checksum errors within the file, so we will:

- Rename the log sequence file so that it cannot be accessed again.

- By not deleting the file, the bad data block(s) in it cannot be recycled and therefore cannot contaminate data sometime in the future.

- We can examine the bad file at leisure to confirm that it is bad and find any other places where checksums have been corrupted.

- We delete all references to keys that depend on the corrupted log sequence file. Then we crash. Chain repair will repopulate the missing keys.

- Silently drop the entire query. The client will see a timeout eventually and have to retry (if it wishes).

The "scavenger" procedure is used to reclaim disk space in the "common log" that is no longer used by a local logical brick. It is essentially a copying garbage collector:

- It determines which hunks in all common log files are currently in use or not in use.

- It perhaps copies some hunks to new log sequence files.

- It perhaps deletes some log sequence files to reclaim disk space.

By default, the scavenger is run once every 24 hours at 03:00.

Each write-ahead log is divided into log sequence files. Each log sequence file contains a sequence of "hunks". The hunks are put into one of two categories:

- A "live" hunk is still in use, i.e. there is a key which has a value blob pointer that points to this hunk.

- A "dead" hunk is not "alive": i.e. the key that originally had a value blob pointer to this hunk has since been changed or deleted.

The goal of the scavenger is to reclaim disk space. The only way to reclaim disk space is to delete files. If the scavenger finds a log sequence file with 0% live hunks, that file can be deleted immediately. However, it is quite rare to find a log sequence file that has 0% live hunks. For all other log sequence files, a different strategy is used:

- Fetch the brick_skip_live_percentage_greater_than attribute from central.conf. Call it SkipPercent.

- For each log sequence file, calculate the ratio of disk space used by live hunks; call it LivePercent.

- For each log sequence file where LivePercent is less than SkipPercent:

  - Copy all live hunks to one or more new log sequence files.

  - Update the location pointers of those keys to point to the new storage locations.

  - Delete the old log sequence file.
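The per-file decision can be expressed as a small planning function. This is a minimal sketch, not the scavenger's code: plan_scavenge and the input shape (a hypothetical map from log sequence file number to a list of (hunk_bytes, is_live) pairs) are illustrative assumptions. LivePercent is computed by bytes, matching "the ratio of disk space used by live hunks".

```python
def plan_scavenge(files, skip_percent):
    """files: {seq_number: [(hunk_bytes, is_live), ...]} (hypothetical shape).

    Returns three lists of file numbers:
    delete_now (0% live), compact (live% < SkipPercent), skip (the rest).
    """
    delete_now, compact, skip = [], [], []
    for seq, hunks in sorted(files.items()):
        total = sum(size for size, _ in hunks)
        live = sum(size for size, is_live in hunks if is_live)
        live_percent = 100.0 * live / total if total else 0.0
        if live == 0:
            delete_now.append(seq)   # nothing live: delete the file immediately
        elif live_percent < skip_percent:
            compact.append(seq)      # copy live hunks, repoint keys, delete file
        else:
            skip.append(seq)         # mostly live: copying would not pay off
    return delete_now, compact, skip
```

With SkipPercent at 90, a file that is 25% live is compacted, while a file that is exactly 90% live is skipped because the comparison is strictly "less than".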

- The long-lived "sync pid" process. This process is responsible for combining, or "batching", multiple brick write operations into a single fsync(2) OS system call. Once an fsync(2) call has finished, the "sync pid" will send a message to the logical brick’s "gen_server" process to tell it what log items (identified by log serial number) have been flushed to disk and can be sent downstream and/or to the client.

- The long-lived "local" write-ahead log process. Each logical brick has its own local write-ahead log, managed by its own local log process. This process works together with the "common log" write-ahead log and the "sync pid" to store brick updates safely to disk.

- Short-lived "checkpoint" processes. When a brick’s local log has grown larger than the brick_check_checkpoint_max_mb configuration variable, a checkpoint process is spawned to perform the checkpoint task. See Hibari Sysadmin Guide, "Checkpoints" section for an overview of the checkpoint procedure.

- Very short-lived processes to implement the data "priming" process; see above for a description.

- A short-lived process to delete keys that have expired.

- Short-lived "scavenger" processes; see the section called “The scavenger”.

The consistent hashing layer is the top-level of the layered abstraction of a Hibari storage cluster, as discussed in Hibari Sysadmin Guide, "Hibari Architecture" section.

The #hash_r record encapsulates two things:

- The algorithm used to choose what part of the key will be used for hashing: the entire key, fixed length prefix, variable length prefix, etc.

- The consistent hashing algorithm itself.

The #g_hash_r record is the "global hash record" for a table. It is the record that is "spammed" to all brick servers and clients (see the description of global hash spamming in the section called “Global Hash spamming”).

The #g_hash_r record contains the #hash_r records for:

- The current hash configuration

- The new hash configuration.

Usually, the current hash config is the same as the new hash config. However, when chains are added/removed/reweighted and a data migration takes place, the new hash configuration is used to determine which keys stay in their current chain and which need to be moved to a new chain.
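To make the current-versus-new comparison concrete, here is a minimal sketch of consistent hashing over a "float map" that gives each chain a slice of the unit interval [0.0, 1.0). MD5 as the key hash, the 48-bit truncation, and the (chain, upper_bound) map shape are illustrative assumptions, not necessarily what brick_hash.erl does. The sketch ignores the prefix-extraction half of #hash_r and shows only how comparing two configurations decides whether a key migrates.

```python
import bisect
import hashlib


def key_to_float(key):
    """Map a key (bytes) to [0.0, 1.0). 48 bits keep the result exactly
    representable as a double, so it can never round up to 1.0."""
    digest = hashlib.md5(key).digest()
    return int.from_bytes(digest[:6], "big") / float(1 << 48)


def chain_for(float_map, key):
    """float_map: [(chain, upper_bound), ...] sorted by bound, last bound 1.0.
    A chain owns the interval [previous_bound, upper_bound)."""
    bounds = [upper for _, upper in float_map]
    return float_map[bisect.bisect_right(bounds, key_to_float(key))][0]


def migration_target(current, new, key):
    """None if the key stays in its chain, else the chain it must move to."""
    old_chain, new_chain = chain_for(current, key), chain_for(new, key)
    return None if old_chain == new_chain else new_chain
```

When the current and new configurations are identical, no key moves, which matches the usual case described above.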

In theory, all Hibari clients have access to an up-to-date copy of each table's #g_hash_r record, via their node-local brick_simple server. In practice, due to message passing latencies, not all clients have the correct global hash 100% of the time. The Admin Server also sends #g_hash_r updates to all server bricks. If a client is using an old global hash, the servers (using the same consistent hash calculations) can forward the request to the correct brick.

The only method that should be used for new Hibari tables is the chash method. The other three, naive, var_prefix, and fixed_prefix, are deprecated and will be removed at some point. The chash method supports all three schemes and also provides migration-related features that the three deprecated schemes alone cannot.

See the EDoc entry for chash_init/3 for full details on all the valid properties that can be passed in the 3rd argument proplist.

The two properties that are mandatory are prefix_method and new_chainweights. We strongly advise that you also include the old_float_map property; the float map can be extracted from a #g_hash_r or a #hash_r record using the chash_extract_new_float_map/1 function.

Do not use the |

The chain changing example in ??? shows how to verify that the #hash_r that you’ve created will result in the key distribution across chains that you desire. See the example of using brick_simple:chash_migration_pre_check/2 in ???.

Older versions of Hibari made substantial use of timer:send_interval/2 for sending periodic timer messages. The timer module’s implementation can be too inefficient when over 1,000 separate timer interval requests are made. The brick_itimer module provides a more CPU-efficient implementation for heavily used timer intervals, for example 1 second.

The latency jitter in delivering these shared timer messages is intentional. Strict real-time accuracy for sending these periodic messages is not required. If stricter delivery timings are required, do not use this module.
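The idea of sharing one timer among many subscribers can be sketched as follows. This is a minimal model under stated assumptions, not brick_itimer's API: SharedIntervalTimer, send_interval, and tick are hypothetical names, time is passed in explicitly as milliseconds, and "sending" a message is represented by returning (pid, msg) pairs.

```python
import collections


class SharedIntervalTimer:
    """One timer per distinct interval fans out to every subscriber of that
    interval, instead of one timer per subscriber."""

    def __init__(self):
        self.subscribers = collections.defaultdict(list)  # interval -> [(pid, msg)]
        self.next_fire = {}                                # interval -> due time (ms)

    def send_interval(self, now_ms, interval_ms, pid, msg):
        """Subscribe pid; the first subscriber for an interval starts its timer."""
        if interval_ms not in self.next_fire:
            self.next_fire[interval_ms] = now_ms + interval_ms
        self.subscribers[interval_ms].append((pid, msg))

    def tick(self, now_ms):
        """Return all (pid, msg) deliveries that are due at now_ms.
        A subscriber that joined mid-interval gets its first message early:
        that is the intentional jitter described above."""
        due = []
        for interval, when in self.next_fire.items():
            if now_ms >= when:
                due.extend(self.subscribers[interval])
                self.next_fire[interval] = when + interval
        return due
```

Usage: a subscriber "a" that joins at t=0 and a subscriber "b" that joins at t=300 on a 1000 ms interval both receive their first message at t=1000, so "b" sees a shorter first period.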

Hibari servers use asynchronous message passing in two major areas:

- Chain replication: sending events "downstream" to the next brick in a chain.

- Chain repair: sending key updates to bricks that have crashed and later restarted.

These asynchronous messages can arrive more quickly than a brick can handle them. Perhaps its CPU is overloaded, or perhaps disk I/O rates are so high that the hardware cannot provide adequate service times. In either case, the number of messages in a logical brick "gen_server" process mailbox can grow too large.

It is vital for good performance that a brick’s mailbox stays small. Large mailboxes can interfere with other messaging, for example, synchronous calls to the brick’s write-ahead log process. If the mailbox grows too large, the VM will spend 100% of a CPU core performing selective receive operations on the mailbox. If the mailbox continues to grow without limit, the entire VM can crash by consuming all virtual memory available to the OS.

If a brick’s mailbox gets too big, then some kind of "pressure" mechanism is required to slow down message producers. In cases of mailbox overload during brick repair, repair operations by the upstream brick (with the "official tail" role) must slow down. In normal chain operations, the head brick must slow down its rate of updates.

The throttling mechanism implemented by brick_mboxmon.erl is straightforward. Every 500 milliseconds, the mailbox size of each brick on the local node is polled via erlang:process_info/2.

- If the mailbox size exceeds the brick_mbox_repair_high_water attribute in central.conf, and if the brick is under repair, then the throttle mechanism is activated.

  - The repair process is stopped and will be restarted in brick_mbox_repair_overload_resume_interval seconds.

- If the mailbox size exceeds the brick_mbox_high_water attribute in central.conf, then the throttle mechanism is activated.

  - The head brick in the chain is flipped to "read-only mode". When in read-only mode, the head brick cannot process any updates, so the head brick cannot create new key updates that will eventually be sent to the overloaded brick.

- When the overloaded brick’s mailbox size falls under brick_mbox_low_water, then the brick is no longer considered overloaded.

  - In cases of repair overload, repair is restarted.

  - In cases of normal chain replication overload, the head brick’s "read-only status" is turned off (i.e. updates are permitted again). For transient overload conditions lasting 0-3 seconds, client request buffering by the head brick is usually sufficient to avoid timeouts visible to Hibari clients. However, if the chain is so overloaded that client timeouts occur, then the client timeout mechanism itself will reduce the chain’s total workload.

The application log files on the overloaded brick, the head brick, and (in repair cases) the Admin Server will contain messages stating when and why the brick was considered overloaded and when the overload condition ended.
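The high/low water marks form a simple hysteresis state machine. The sketch below models one poll of one brick; mbox_check and the state/action strings are hypothetical names, the keyword arguments stand in for the central.conf attributes named above, and checking the repair threshold before the general one is an assumption. It does not model the timed restart after brick_mbox_repair_overload_resume_interval.

```python
def mbox_check(state, mbox_len, under_repair, *, high_water, repair_high_water, low_water):
    """One poll of one brick's mailbox (brick_mboxmon polls every 500 ms).

    state is None, "repair_overload", or "chain_overload".
    Returns (new_state, action); action is None when nothing changes.
    """
    if state is None:
        if under_repair and mbox_len > repair_high_water:
            return "repair_overload", "stop_repair"
        if mbox_len > high_water:
            return "chain_overload", "set_head_read_only"
        return None, None
    if mbox_len < low_water:
        # Hysteresis: only falling below the low-water mark ends the overload.
        if state == "repair_overload":
            return None, "restart_repair"
        return None, "clear_head_read_only"
    return state, None  # still between the low and high marks: no change
```

Using separate high and low marks keeps the throttle from flapping on and off when the mailbox size hovers near a single threshold.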

During times when a table’s chain configuration is changed (e.g. chains added, removed, or reweighted), a "data migration" takes place. Some keys are copied, or "migrated", from one chain to another. The brick_migmon.erl module is responsible for monitoring the overall status of this data migration period.

Data migrations are tied closely to a table’s global hash record. Each Hibari table has its own global hash record (#g_hash_r record). It is therefore possible to have multiple migrations running simultaneously for multiple tables.

However, each data migration for a global hash is assigned a "cookie", which is an opaque Erlang term. In the event that some or all processes in the Admin Server crash, this cookie is used to distinguish between resuming an in-progress migration and starting a new migration. If migrations are numbered X, X+1, X+2, etc., then a migration X+1 for table Y will not be permitted to start until migration X for table Y has finished.

Earlier versions of Hibari had difficulty with brick "pinger" processes getting timeouts when trying to check a logical brick’s health. Under extremely high workloads, the first-come, first-served nature of a "gen_server"'s message handling was not sufficient.

The solution is to create a separate "pingee" process for each logical brick. As the main brick "gen_server" process makes major state transitions, those transitions are transmitted to the "pingee" process. The "pinger" processes actually communicate with the "pingee" process for health inquiries and not with the main brick process. Because the "pingee" is only used for state transition and health check messages, its mailbox is almost always empty, and its process almost always idle. This combination helps make responses to health checks much quicker.

This module implements the Admin Server’s "scoreboard" process. The scoreboard reflects the health status of each logical brick and chain.

To accelerate read-only query performance, all scoreboard status is maintained in RAM. To make the scoreboard resilient in case of a crash, each status change is synchronously written to the Admin Server’s private bootstrap_copy* bricks. See Section 2.2, “Admin Server notes: crash-recovery design” for more info on crash-recovery design. For more information about the Admin Server’s bootstrap bricks, see Hibari Sysadmin Guide, "Admin Server’s Private State: the Bootstrap Bricks" section and Hibari Sysadmin Guide, "Bricks outside of chain replication" section.

Each status change event that is sent to the scoreboard includes a proplist that can contain additional information about the event. At this time, there is no requirement of mandatory properties in that proplist. Though mandatory properties may be introduced later, the main purpose is merely to provide a human developer/systems administrator with some extra information about the event.

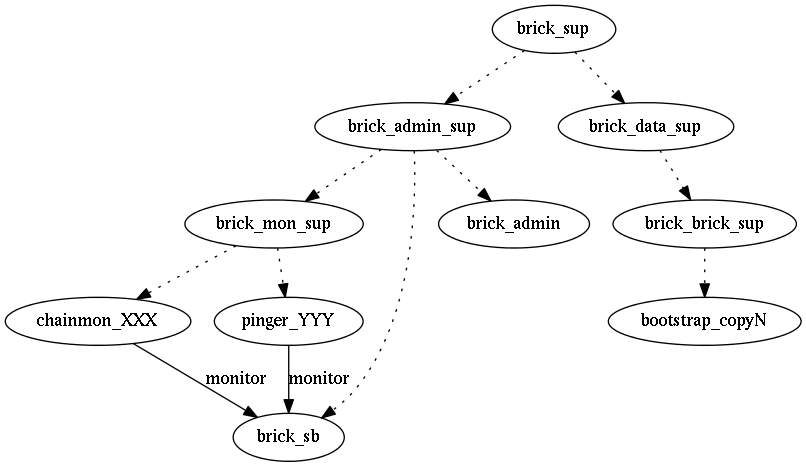

The scoreboard and its surrounding (partial) supervision tree are depicted above by "dotted" lines. The chainmon_XXX and pinger_YYY processes manually establish a link with the brick_sb process. If a "down event" for the brick_sb process is received, the chainmon_XXX and pinger_YYY processes sleep for 1 second before exiting abnormally. The brick_mon_sup supervisor will then automatically restart the chainmon_XXX and pinger_YYY processes.

During initialization of the scoreboard, the brick_sb loads the “scoreboard” operational history into RAM from the bootstrap bricks via the brick_admin server. After initialization, the brick_sb manages the state in RAM and synchronously writes the full state directly to the bootstrap bricks after receiving new chain and/or brick status reports. To help improve performance, the implementation of the brick_sb is optimized to process all pending status reports as a batch and to then save the full state to the bootstrap bricks as a single write operation.

The maximum length of the scoreboard’s list of historical events is hard-coded at 100. This should be changed to use a

|

The brick_server.erl module implements the storage-agnostic aspects of a logical brick. It isn’t fully insulated from matters related to ETS and the disk write-ahead log, but it’s close. See the beginning of the section called “brick_ets.erl” for more details of how brick_ets.erl started and how brick_server.erl came along later.

See also the section called “"gen_server" nested inside a "gen_server", Matroshka-style”.

After the split of brick_ets.erl and brick_server.erl, the "gen_server" callback functions of both were preserved, even though both are used by a single process. As far as OTP is concerned, brick_server.erl is the callback module used for the main logical brick gen_server process. If a call, cast, or message isn’t handled by brick_server's callback function, then the message is handled by brick_ets's callback function.

The wrinkle in this otherwise flawless scheme is that brick_server.erl has to maintain the #state record that brick_ets.erl uses, keeping it completely separate from brick_server.erl's record of the same name.
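A minimal Python analogy of this Matroshka-style delegation, with hypothetical class and message names: the outer object handles the calls it knows about, hands everything else to the inner object, and stores the inner module's state as an opaque field of its own, much like brick_server.erl holding brick_ets.erl's #state record. It is an illustration of the structure only, not a model of OTP's gen_server.

```python
class BrickEts:
    """Stand-in for brick_ets.erl's callbacks; works only on its own state."""

    def handle_call(self, msg, ets_state):
        if msg[0] == "get":
            return ets_state["ctab"].get(msg[1]), ets_state
        if msg[0] == "set":
            ets_state["ctab"][msg[1]] = msg[2]
            return "ok", ets_state
        return ("error", "unknown_call"), ets_state


class BrickServer:
    """Stand-in for brick_server.erl: handles what it knows, delegates the rest."""

    def __init__(self):
        self.inner = BrickEts()
        # The outer state keeps the inner module's state as a separate field,
        # so the two "state records" never get mixed up.
        self.state = {"role": "standalone", "ets_state": {"ctab": {}}}

    def handle_call(self, msg):
        if msg[0] == "status":  # handled by the outer module itself
            return {"role": self.state["role"]}
        reply, self.state["ets_state"] = self.inner.handle_call(msg, self.state["ets_state"])
        return reply
```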

See ??? for examples that use the do() client interface.

All of the basic do operations are encoded into a small number of tuples. See make_op2(), make_op5(), and make_op6(). Note that if you want to manage your own timestamps, rather than use ones based on the OS system clock, you must use the make_op6() function yourself. A more convenient API doesn’t yet exist because there’s been no need for it … but it would be easy & quick to write.

By default, the various operations within a do call’s DoList do not have any transaction semantics. If a DoList contains 5 operations, then there is almost no difference between sending that DoList to a server and sending 5 different do operations, each containing a single op. However, there are two good reasons why an application developer might want to combine those 5 ops into a single do call:

- To reduce the total amount of time required to process the 5 ops.

- To take advantage of the brick’s guarantee that no other ops (sent by another client) can be interleaved with the execution of those 5 ops.

  - Note that this guarantee is not the same as the guarantees provided by micro-transactions.

There are two types of flags that can be sent with a do op:

- Flags bundled in the make_op* function, which affect only that particular operation.

  - See the EDoc description of encode_op_flags/1 for valid op flag names.

- Flags that affect all ops in the DoList.

  - See the EDoc description of do/5 for valid DoFlags names.

The Admin Server maintains the table-specific settings for disk logging and log flush parameters for all bricks in the table. By default, both disk logging and log flushing are enabled. Both features are actually implemented by brick_ets.erl, but the API to change those parameters at runtime is handled through brick_server.erl.

See set_do_logging/2 and set_do_sync.

The role management functions were designed for use by the Admin Server to manage the brick lifecycle state machine. See the comment "Chain admin & related API" in the -export statements at the top of the file.

See Hibari Sysadmin Guide, "Brick Lifecycle Finite State Machine" section for a description of a brick’s lifecycle within chain replication.

Management of the #chain_r record is tricky whenever a role is changed. There are some attributes of the record that should be reset to a default value and other attributes that must be preserved. Examples of the latter are log serial numbers used by the brick as well as serial numbers ack’ed downstream & upstream. Some role transitions must also be reflected in the brick_pingee helper process (see the section called “brick_pingee.erl”). The result looks more complex than you’d first believe is necessary, but there isn’t much excess code to remove: much of that complexity is necessary.

As described in the section called “brick_hash.erl”, the brick_hash.erl module is used for consistent hashing calculations by Hibari clients. However, because a client may be acting on old/stale data, each Hibari brick also uses brick_hash to verify that each operation it receives should be executed locally.

If a client sends a do call to the wrong brick, as calculated by the brick’s global hash, then that brick will forward the do call to what it believes is the correct brick. However, due to asynchronous message passing, scheduling latencies, network latencies, etc., the brick itself may have an old/stale version of the global hash. In such a case, the do will be forwarded to the wrong brick. However, because each brick will forward the do to where it believes the do should go, eventually the do call will arrive at its proper location. In the event that the forwarding takes too long, the client will see a timeout.

The forwarding mechanism is limited by a couple of factors:

- Each forwarding increments a forwarding hop counter.

- After the first few forwarding hops, a geometrically-increasing sleep period is used before actual forwarding.

- After a limit of 18 forwarding hops, the query is dropped.
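The three limits above can be combined into one decision function. This is a minimal sketch: only the 18-hop limit comes from the text. FREE_HOPS (how many "first few" hops forward without sleeping) and BASE_SLEEP_MS (the start of the geometric series) are hypothetical values chosen for illustration, and forward_decision is not a brick_server.erl function.

```python
MAX_HOPS = 18        # from the text: drop the query after 18 forwarding hops
FREE_HOPS = 3        # hypothetical: "the first few" hops forward immediately
BASE_SLEEP_MS = 5    # hypothetical: first sleep of the geometric back-off


def forward_decision(hops_so_far):
    """Decide what to do with a mis-routed do call that has already been
    forwarded hops_so_far times. Returns ("forward", sleep_ms) or ("drop", 0)."""
    if hops_so_far >= MAX_HOPS:
        return ("drop", 0)
    if hops_so_far < FREE_HOPS:
        return ("forward", 0)
    # Geometrically increasing sleep gives stale global hashes time to update.
    return ("forward", BASE_SLEEP_MS * 2 ** (hops_so_far - FREE_HOPS))
```

The growing sleep means a request that keeps bouncing between bricks with stale hashes backs off instead of flooding the network, and the hop limit bounds the total work before the client's own timeout takes over.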

Each time the do call is forwarded, the SentAt time (which is originally set by the client node) is reset to the current wall-clock time. This reset prevents the forwarded call from being rejected by enforcement of the brick_do_op_too_old_timeout configuration attribute; see Hibari Sysadmin Guide, "NTP configuration of all Hibari server and client nodes" section.

See Hibari Sysadmin Guide, "Chain Lifecycle Finite State Machine" section for background information.

Both chain replication protocol messages and client replies are sent "downstream", i.e. they are sent to the next brick in the chain.

The reply term for a do call is piggy-backed onto the chain replication message that is sent down the chain. When a chain replication protocol message reaches a brick that has the "official tail" role, then:

- The reply is sent to the client.

- If the brick has a downstream brick (i.e. there is a brick currently under repair that is downstream of the "official tail" brick), then the chain replication protocol message is sent downstream.

If the do operation has the ignore_role property in its DoFlags property list, then the reply is sent directly to the client (instead of the default behavior of being sent downstream with the chain replication message).

The chain replication protocol messages are:

- {ch_log_replay, UpstreamBrick, Serial, Thisdo_Mods, From, Reply}

  The UpstreamBrick and Serial terms are used to verify that the message comes from the correct brick and that messages have not been sent out-of-order. The Thisdo_Mods term contains the write-ahead log terms associated with this update (i.e. insert and delete commands), and From and Reply are used to send the Reply term to the client.

- {ch_serial_ack, Serial, BrickName, Node, Props}

  Once per second, the tail brick sends this message upstream. All other bricks in the chain forward it upstream until it reaches the head brick. All bricks in the chain keep track of the Serial number in these messages and purge from their in-memory buffers all log replay requests with serial numbers less than or equal to Serial: these replay requests are no longer required to recover from failure of a middle brick.
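The two checks in ch_log_replay and the purge on ch_serial_ack can be sketched as follows. This is a minimal model, not brick_server.erl code: ChainBrick and its method names are hypothetical, and requiring strictly increasing (rather than consecutive) serials is an assumption. Raising an exception mirrors the text's description of crashing on out-of-order serials.

```python
class ChainBrick:
    """Hypothetical model of one brick's replay bookkeeping."""

    def __init__(self, upstream):
        self.upstream = upstream  # name of the expected upstream brick
        self.last_serial = 0      # last Serial applied from upstream
        self.unacked = {}         # Serial -> mods, kept until ch_serial_ack

    def ch_log_replay(self, upstream_brick, serial, mods):
        if upstream_brick != self.upstream:
            raise RuntimeError("log replay from unexpected brick %r" % upstream_brick)
        if serial <= self.last_serial:
            # Paranoid sanity check: crash rather than apply out-of-order updates.
            raise RuntimeError("out-of-order serial %d" % serial)
        self.last_serial = serial
        self.unacked[serial] = mods

    def ch_serial_ack(self, acked_serial):
        """Purge buffered replays no longer needed for middle-brick recovery."""
        for s in [s for s in self.unacked if s <= acked_serial]:
            del self.unacked[s]
```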

See the section called “Log flushing and the sync pid and the logging_op_q” for background on the write-ahead log serial number’s use and management.

There is a lot of code in chain_send_downstream_iff_empty_log_q/6 and elsewhere to make certain that log events are sent downstream without violating ordering constraints. I confess it isn’t pretty, but all of the bugs that I know of have been wrung out. If there are still bugs (and I suspect but cannot prove that there is one more), the paranoid sanity checking done by the downstream/receiving brick will crash the brick if Serial numbers are received out of order. It isn’t a pretty way to recover from such an error, but it’s the safest reaction that I know of.

The chain repair protocol has been implemented twice. The first protocol was a brute-force, "as simple as possible" affair. The upstream brick would send a series of {ch_repair, Serial, RepairList} messages to the repairing brick. RepairList contained a list of store tuples (see the section called “The "store tuple"”) for all keys in the table. Note that these store tuples will always contain the full value blob.

The first protocol was deprecated after it became clear that "as simple as possible" had too much overhead. When in-RAM storage of value blobs was the only option, then it was cheap to fetch the value blobs and send them across the network to the repairing brick. But when "bigdata" storage was introduced (see the section called “Value blob storage on disk: bigdata_dir”), then the disk I/O, network bandwidth, and total latency became far too high to be practical.

The second chain repair protocol uses two rounds of messages to avoid the I/O problems caused by the first protocol:

- First round: The upstream sends a list of keys, a subset of all keys stored by the brick. The downstream replies with the list of keys that it does not have copies of.

- Second round: If the downstream does not need any keys, this round is skipped. Otherwise, the upstream sends the downstream the store tuples (including value blob) for only the keys that the downstream requested in round 1.

The following messages are exchanged:

- {ch_repair_diff_round1, Serial, RepairList}: The 1st round message sent by the upstream brick. Only keys and timestamps are included in RepairList.

- {ch_repair_diff_round1_ack, Serial, BrickName, Node, Unknown, Ds}: The downstream brick’s response to the {ch_repair_diff_round1, ...} message. The Unknown term is a list of keys that are missing from the downstream brick (completely missing or with a timestamp mismatch). The Ds term informs the upstream brick of how many keys were deleted by the downstream brick. If the brick’s value blobs are stored on disk, then the upstream brick uses an asynchronous "priming" mechanism to force those blobs into RAM before sending the 2nd round.

- {ch_repair_diff_round2, Serial, RepairList, Ds}: The upstream brick sends this message with RepairList containing all store tuples (with value blobs) for all keys requested in round 1. The Ds term is not used.

- {ch_repair_ack, Serial, BrickName, Node, Inserted, Deleted}: The downstream brick sends this message in both the old and new repair protocol versions. Inserted and Deleted count the number of keys that were inserted into and deleted from the repairing brick, respectively.

- {ch_repair_finished, Brick, Node, Checkpoint_p, NumKeys}: The upstream brick sends this message when the round 1 messages have iterated over all keys. When the downstream brick receives it, the downstream brick moves itself from the repairing state to the ok state. The brick "pinger" process will notice this state transition and trigger further chain role changes.
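The downstream side of round 1 can be sketched as follows. This is a minimal, hypothetical Erlang module (repair_round1_sketch is not part of Hibari): it models a brick’s keys as a map of Key => Timestamp with value blobs omitted, and it assumes, for the sake of the sketch, that deletions are limited to the key range spanned by the RepairList.

```erlang
-module(repair_round1_sketch).
-export([round1/2]).

%% Hypothetical model of the downstream brick's round 1 handling.
%% RepairList: sorted list of {Key, TS} pairs from the upstream brick.
%% Store: map of Key => TS held by the downstream brick.
%% Returns {Unknown, Ds, NewStore}: Unknown lists the keys the downstream
%% is missing or holds with a different timestamp; Ds is the number of
%% local keys deleted because the upstream does not have them.
round1([], Store) ->
    {[], 0, Store};
round1(RepairList, Store) ->
    {FirstKey, _} = hd(RepairList),
    {LastKey, _} = lists:last(RepairList),
    Upstream = maps:from_list(RepairList),
    %% Assumption: only local keys within [FirstKey, LastKey] are in scope.
    ToDelete = [K || K <- maps:keys(Store),
                     K >= FirstKey, K =< LastKey,
                     not maps:is_key(K, Upstream)],
    NewStore = maps:without(ToDelete, Store),
    %% Missing keys and timestamp mismatches both go into Unknown.
    Unknown = [K || {K, TS} <- RepairList,
                    maps:get(K, NewStore, undefined) =/= TS],
    {Unknown, length(ToDelete), NewStore}.
```

Only the Unknown keys need their value blobs fetched (and, for on-disk storage, primed) for round 2, which is where the second protocol saves I/O compared to the first.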

While these repair protocol messages are exchanged, the upstream brick will send all {ch_log_replay,...} chain replication messages as updates occur. On the upstream brick, the {ch_repair_diff_round1, Serial, RepairList} message is created without interference from client updates; any keys not in RepairList are immediately deleted by the downstream brick before it replies with the {ch_repair_diff_round1_ack,...} message. Therefore, any possible race conditions involving client updates of keys within the RepairList range of keys are resolved correctly by the log serial mechanism, because only one of two races can happen inside the upstream brick:

- The client update {ch_log_replay,...} message is sent before the upstream creates and sends the round 1 repair message. Any keys updated in the log replay message are guaranteed to be included in the round 1 repair message.

- The client update {ch_log_replay,...} message is sent after the upstream creates and sends the round 1 repair message. Any keys updated in the log replay message are guaranteed not to be included in the round 1 repair message. Replaying the log replay message is safe at any time (as long as serial number ordering is preserved).
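The "serial number ordering" requirement can be illustrated with a small, hypothetical Erlang module (serial_replay_sketch is invented here and is not Hibari’s implementation). It assumes serial numbers are consecutive integers: a message that arrives early is buffered until all of its predecessors have been applied, and a message whose serial has already been applied is ignored.

```erlang
-module(serial_replay_sketch).
-export([new/1, deliver/3]).

%% State is {NextSerial, Pending}, where Pending maps Serial => Msg for
%% messages that arrived before their predecessors.
new(FirstSerial) ->
    {FirstSerial, #{}}.

%% deliver(Serial, Msg, State) -> {ReadyMsgs, NewState}
%% ReadyMsgs are the messages that may now be applied, in serial order.
deliver(Serial, Msg, {Next, Pending}) when Serial >= Next ->
    drain(Next, maps:put(Serial, Msg, Pending), []);
deliver(_Serial, _Msg, State) ->
    %% Serial already applied (a duplicate or stale message): ignore it.
    {[], State}.

%% Release consecutive buffered messages starting at Next.
drain(Next, Pending, Acc) ->
    case maps:find(Next, Pending) of
        {ok, Msg} ->
            drain(Next + 1, maps:remove(Next, Pending), [Msg | Acc]);
        error ->
            {lists:reverse(Acc), {Next, Pending}}
    end.
```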

The data migration mechanism is used to move keys from one chain to another when chains are added, removed, or reweighted. The task of moving keys while maintaining strong consistency is a delicate business. The protocol described below, which uses two rounds (or phases), moves keys safely between chains.

During migration, the head of each chain maintains a "sweep key pointer". This pointer moves through the keys, first to last (in lexicographic sorting order).

- Keys that are "in front" of the sweep key, i.e. keys that are larger than the sweep key, have not yet been scanned by the migration algorithm.

- Keys that are "behind" the sweep key, i.e. keys that are smaller than the sweep key, have been scanned by the migration algorithm.

The sweep key advances through the head brick’s keys, advancing by a maximum of max_keys_per_iter keys (a configuration attribute in central.conf) or a maximum of 64MB of blob values (hardcoded for now in get_sweep_tuples/4). These keys are what the code calls the "sweep zone": those keys between the sweep key’s current value and the sweep key’s value from the last successful iteration of the migration protocol.
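A hypothetical Erlang sketch of how the next sweep zone could be chosen follows (this is not the code of get_sweep_tuples/4). It assumes the head brick’s keys are available as a sorted list of {Key, BlobSize} pairs and uses the atom start to mean "no previous iteration". It always takes at least one key, so the sweep makes progress even if a single blob exceeds the byte limit.

```erlang
-module(sweep_zone_sketch).
-export([next_zone/3, next_zone/4]).

%% Mirrors the 64MB blob limit mentioned in the text.
-define(MAX_ZONE_BYTES, 64 * 1024 * 1024).

next_zone(Keys, SweepKey, MaxKeys) ->
    next_zone(Keys, SweepKey, MaxKeys, ?MAX_ZONE_BYTES).

%% Keys: lexicographically sorted [{Key, BlobSize}] on the head brick.
%% SweepKey: sweep key from the last successful iteration, or start.
%% MaxKeys: plays the role of max_keys_per_iter.
%% Returns {ZoneKeys, NewSweepKey}.
next_zone(Keys, SweepKey, MaxKeys, MaxBytes) ->
    case take(ahead(Keys, SweepKey), MaxKeys, MaxBytes, 0, []) of
        []   -> {[], SweepKey};
        Zone -> {Zone, lists:last(Zone)}
    end.

%% Keys "in front" of the sweep key, i.e. not yet scanned.
ahead(Keys, start) -> Keys;
ahead(Keys, SweepKey) -> [{K, S} || {K, S} <- Keys, K > SweepKey].

take([], _MaxKeys, _MaxBytes, _Bytes, Acc) ->
    lists:reverse(Acc);
take(_Keys, 0, _MaxBytes, _Bytes, Acc) ->
    lists:reverse(Acc);
take([{K, Size} | Rest], MaxKeys, MaxBytes, Bytes, Acc) ->
    case Acc =/= [] andalso Bytes + Size > MaxBytes of
        true  -> lists:reverse(Acc);  % byte budget would be exceeded
        false -> take(Rest, MaxKeys - 1, MaxBytes, Bytes + Size, [K | Acc])
    end.
```

After an iteration succeeds, every key up to and including NewSweepKey is "behind" the sweep key.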

- {ch_log_replay,...} message with a {plog_sweep,phase1_sweep_info,#sweepcheckp_r} modification inside: If the chain length is > 1, the head brick must inform all bricks in the chain where the new sweep key is located. For chains of length 1, this first phase is not required. All bricks record the sweep info in a sweepcheckp_r record in their private brick metadata: the head brick before sending the message, all other bricks when receiving it. The private metadata is used to recover vital state in case the head brick crashes. NOTE: This migration sweep metadata is called a sweep checkpoint and is not related to a brick key checkpoint.

- {sweep_phase1_done,LastKey}: Sent by the tail of the chain back to the head, acknowledging that all bricks in the chain have seen the {plog_sweep,phase1_sweep_info,...} message.

- {ch_sweep_from_other, ChainHeadPid, ChainName, Thisdo_Mods, LastKey}: This message starts the second phase of migration. The head brick has calculated which keys must be moved to a new chain. This message is sent to the head of the new chain. Thisdo_Mods contains the list of store tuples that are moving to the receiving brick; this list is sent down the chain using the usual chain replication protocol. LastKey specifies the sweep key location for the ChainName chain. One of these messages is sent to each chain that stores keys within the sweep zone; call the number of chains X.

- {sweep_phase2_done, Key, PropList}: When the modifications from the second phase’s {ch_sweep_from_other,...} message have reached the tail of the new chain, this message is sent to the head of the old chain to acknowledge that the migrated keys are now fully replicated on the new chain. When the head brick has received {sweep_phase2_done,...} messages from all X chains, the second phase of migration is finished.
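The head brick’s phase 2 bookkeeping can be sketched as below. This hypothetical module is not Hibari code: ChainOf is an assumed stand-in for the new key-to-chain mapping, keys that map to the head’s own chain stay put, and the sketch only waits for {sweep_phase2_done, ...} replies from chains that actually receive keys.

```erlang
-module(sweep_phase2_sketch).
-export([partition/3, ack/2]).

%% ZoneKeys: keys in the current sweep zone.
%% ThisChain: the chain that the head brick belongs to.
%% ChainOf: fun(Key) -> ChainName (hypothetical new mapping).
%% Returns {Keep, Moves, Waiting}: keys that stay, a map of
%% DestChain => Keys to ship via {ch_sweep_from_other, ...}, and the set
%% of chains whose {sweep_phase2_done, ...} reply is still outstanding.
partition(ZoneKeys, ThisChain, ChainOf) ->
    {Keep, Moves} =
        lists:foldl(
          fun(Key, {KeepAcc, MoveAcc}) ->
                  case ChainOf(Key) of
                      ThisChain ->
                          {[Key | KeepAcc], MoveAcc};
                      Dest ->
                          {KeepAcc,
                           maps:update_with(Dest, fun(Ks) -> [Key | Ks] end,
                                            [Key], MoveAcc)}
                  end
          end, {[], #{}}, ZoneKeys),
    %% Restore the original (sorted) key order inside each batch.
    Ordered = maps:map(fun(_Dest, Ks) -> lists:reverse(Ks) end, Moves),
    {lists:reverse(Keep), Ordered, sets:from_list(maps:keys(Ordered))}.

%% Record a {sweep_phase2_done, ...} reply from Chain.
ack(Chain, Waiting) ->
    Waiting1 = sets:del_element(Chain, Waiting),
    case sets:size(Waiting1) of
        0 -> {done, Waiting1};
        _ -> {waiting, Waiting1}
    end.
```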

In between round 1 and round 2 of a migration sweep iteration, the same value blob "priming" technique is used to prevent disk I/O from blocking the brick’s gen_server process. See sweep_move_or_keep/3 and spawn_val_prime_worker_for_sweep/3.

"SSF" stands for "Server-Side Fun". An SSF is an Erlang fun that is created by a Hibari client and executed on a Hibari server brick. The SSF can rewrite the DoList of operations in the do call based on what it finds in the brick’s internal state.

In the end, the SSF cannot do anything that cannot be done with multiple queries to a brick. For example, here is a simple two-query scheme to update a simple counter value in a race-safe manner:

Incrementing a counter.

```erlang
%% Read the current value and its timestamp.
{ok, TS, OldValBin} = brick_simple:get(TableName, Key),
OldVal = binary_to_term(OldValBin),
%% The testset flag makes the replace fail if another client has
%% updated Key since the get (the timestamp no longer matches).
ok = brick_simple:replace(TableName, Key, term_to_binary(OldVal + 1),
                          [{testset, TS}]),
%% Use OldVal in code below this point.
```

An SSF would use a very similar bit of logic and would create the same replace operation.

Incrementing a counter with an SSF.

```erlang
F = fun(Key, _DoOp, _DoFlags, S) ->
        %% Peek at the key's current timestamp and value inside the brick.
        [{_Key, TS, Val, _Exp, _KeyFlags}] = brick_server:ssf_peek(Key, true, S),
        OldVal = binary_to_term(Val),
        %% Return a testset-guarded replace plus a pass-through term.
        {ok, [brick_server:make_replace(Key, term_to_binary(OldVal + 1), 0,
                                        [{testset, TS}]),
              {current_val, OldVal}]}
    end,
Op = brick_server:make_ssf(Key, F),
[ok, {current_val, OldVal}] = brick_simple:do(TableName, [Op]),
%% Use OldVal in code below this point.
```

The SSF fun creates a list of do primitive operations, in this case two operations:

- A replace operation to update the key.

- A "pass-through" 2-tuple to tell the client the current value of the counter. Because this 2-tuple isn’t a valid brick operation, the term is returned to the client as-is.

Here is an example that uses the SSF above. It assumes that the shell variable F has been bound to the fun above. A cut-and-paste of the code above will work well, assuming that F is not already bound to a shell variable and that the final "end," is replaced with "end.".

```
(hibari_dev@bb3)13> brick_simple:set(tab1, "c1", term_to_binary(0)).
ok
(hibari_dev@bb3)14> Op = brick_server:make_ssf("c1", F).
{ssf,<<"c1">>,[#Fun<erl_eval.4.105156089>]}
(hibari_dev@bb3)15> brick_simple:do(tab1, [Op]).
[ok,{current_val,0}]
(hibari_dev@bb3)16> brick_simple:do(tab1, [Op]).
[ok,{current_val,1}]
```

The SSF is executed to create a list of …

For example, here is a case where the timestamp of the key has been modified in a race with another client. Note that the 2nd element in the return term, …

```
(hibari_dev@bb3)21> brick_simple:do(tab1, [Op2]).
[{ts_error,1272654442441669},{current_val,2}]
```

The API of the SSF is a work-in-progress. It is used by one internal Cloudian project but otherwise does not have strong backward-compatibility requirements.


The implementation of SSFs (server-side funs) on the server side of the world is an experiment using a general framework for modifying the do operations sent by a client before the brick executes them. This experiment is implemented by the combination of:

- The brick_preprocess_method configuration attribute in central.conf.

- The handle_call_do/3 and preprocess_fold/4 functions.

- Individual functions in the #state.do_list_preprocess list.

The current implementation allows the output of the SSF to replace the {ssf, Key, Fun} tuple inside the do call’s DoList list of operations. It does not permit arbitrary modification of the entire DoList list, nor does it permit examination of other operations in the DoList. The reasoning for this limitation is that all DoList items ultimately come from a single client in a single do operation; if the client wanted to reorder things arbitrarily, it could do so before sending the do call.
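The replacement rule above can be sketched as a flat map over the DoList. This is a hypothetical module, not preprocess_fold/4 itself: it assumes an SSF returns {ok, Terms} as in the counter example and passes the do call’s flags straight through. lists:flatmap/2 replaces each {ssf, Key, Fun} element in place with the terms the fun returns, while every other element is left untouched and no SSF can see its neighbours. (The shell session earlier shows make_ssf/2 wrapping the fun in a list; this sketch follows the {ssf, Key, Fun} form described in the text.)

```erlang
-module(ssf_expand_sketch).
-export([expand/3]).

%% Replace each {ssf, Key, Fun} in DoList with the terms returned by
%% Fun(Key, DoOp, DoFlags, State). Other operations pass through unchanged.
%% State stands in for the brick's internal state (hypothetical).
expand(DoList, DoFlags, State) ->
    lists:flatmap(
      fun({ssf, Key, Fun} = DoOp) when is_function(Fun, 4) ->
              {ok, Terms} = Fun(Key, DoOp, DoFlags, State),
              Terms;
         (Other) ->
              [Other]
      end, DoList).
```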

When an MD5 checksum error is detected by brick_ets.erl or one of the write-ahead log modules, dealing with the error is a bit complex:

- Data structures maintained by brick_ets.erl require changes.

- The error may be inside a file in the "common log", the part of the write-ahead log shared by all bricks on the node. In this case, all bricks must be notified that a file in the common log is bad.

The common_log_sequence_file_is_bad/3 function is used by gmt_hlog_common.erl to notify each brick when an MD5 checksum error is found.
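A hypothetical sketch of the two checks involved follows: verifying a blob against its stored digest with erlang:md5/1 and, once a common-log file is known to be bad, finding the keys whose value blobs point into it. The {Key, {SeqNum, Offset}} layout is an assumption of this sketch, not brick_ets.erl’s actual data structure.

```erlang
-module(md5_error_sketch).
-export([check_blob/2, keys_in_bad_file/2]).

%% Compare a blob read from a log file with the MD5 digest stored for it.
check_blob(Blob, StoredMD5) when is_binary(Blob), is_binary(StoredMD5) ->
    case erlang:md5(Blob) of
        StoredMD5 -> ok;
        _Other    -> {error, md5_mismatch}
    end.

%% KeyLocs: [{Key, {SeqNum, Offset}}] (hypothetical layout).
%% Returns the keys whose value blobs live in log file BadSeq.
keys_in_bad_file(BadSeq, KeyLocs) ->
    [Key || {Key, {Seq, _Offset}} <- KeyLocs, Seq =:= BadSeq].
```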

The checkpoint operation is implemented by brick_ets.erl, but the API options are documented in the EDoc for brick_server:checkpoint/3.

See the section called “ETS tables” and the section called “Processes created by brick_ets.erl” for more information about checkpointing.

The implementation of the scavenger is spread across two modules. As a general rule, the low-level key scanning and hunk copying is done by functions in brick_ets.erl, while the higher-level coordination is implemented in gmt_hlog_common.erl.

One property worth noting here is the destructive option. If this property is false, then the scavenger will read keys but not do anything to relocate the keys. When used in combination with {skip_live_percentage_greater_than,100}, the scavenger can verify the MD5 checksums of all value blobs by reading all value blob hunks in sorted, log file sequence order.
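Why a threshold of 100 reads every file can be seen from a hypothetical sketch of the skip test (scav_skip_sketch and its {SeqNum, LiveBytes, TotalBytes} input are invented for illustration): a file is skipped only when its live percentage is greater than the threshold, and a live percentage can never exceed 100.

```erlang
-module(scav_skip_sketch).
-export([files_to_scan/2]).

%% Hypothetical sketch of the skip_live_percentage_greater_than test.
%% Files: [{SeqNum, LiveBytes, TotalBytes}] for each log file.
%% Returns the sequence numbers of files to scan, in ascending order.
files_to_scan(Files, Threshold) ->
    lists:sort([Seq || {Seq, Live, Total} <- Files,
                       not (live_pct(Live, Total) > Threshold)]).

%% Percentage of a file's bytes that still belong to live values.
live_pct(_Live, 0) -> 0.0;
live_pct(Live, Total) -> 100 * Live / Total.
```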

The scavenger can be halted manually using gmt_hlog_common:stop_scavenger_commonlog/0 and resumed using gmt_hlog_common:resume_scavenger_commonlog/2.

One of the design ideas behind the scavenger is that it should try to avoid random I/O as much as possible. The scavenger uses the same write-ahead log as everyone else, and since the write-ahead log always uses sequential file writes and does a decent job of batching multiple writes into fewer fsync(2) calls, write I/O is mostly sequential. However, the scavenger’s read I/O should also be as sequential as possible … so the scavenger goes to a lot of effort to read all hunks from a single log file at the same time and in sequential offset order.
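The "one log file at a time, in offset order" read strategy amounts to sorting hunk locations by {SeqNum, Offset} and grouping them by file. A hypothetical Erlang sketch, assuming each hunk location is known as {Key, SeqNum, Offset} (not the scavenger’s real data structures):

```erlang
-module(seq_read_sketch).
-export([read_plan/1]).

%% Locs: [{Key, SeqNum, Offset}] for the hunks to read.
%% Returns [{SeqNum, [{Offset, Key}]}], files in ascending sequence order
%% and hunks within each file in ascending offset order.
read_plan(Locs) ->
    Sorted = lists:sort([{Seq, Off, Key} || {Key, Seq, Off} <- Locs]),
    group(Sorted, []).

group([], Acc) ->
    %% Groups and their hunk lists were built in reverse; restore order.
    lists:reverse([{Seq, lists:reverse(Hunks)} || {Seq, Hunks} <- Acc]);
group([{Seq, Off, Key} | Rest], [{Seq, Hunks} | Acc]) ->
    %% Same log file as the current group: append to it.
    group(Rest, [{Seq, [{Off, Key} | Hunks]} | Acc]);
group([{Seq, Off, Key} | Rest], Acc) ->
    %% First hunk of a new log file: start a new group.
    group(Rest, [{Seq, [{Off, Key}]} | Acc]).
```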

The sequential read property introduced in the section called “Scavenger and code reuse” can be useful in other areas also, for example, the "fast sync" utility in brick_admin.erl.